AI

- Artificial Intelligence has a way to go before it really changes the way that ships operate and goods are delivered autonomously. The AI wouldn't just manage existing software but would actively develop and implement its own upgrades to optimize performance with the current hardware and address any driver needs. This aligns with the emerging concept of AI Operating Systems that can self-improve over time.

This would extend to designing new processors to overcome any performance shortfall it identifies.

Framing the Evolution in Stages: The narrative focuses on specific upgrades or adaptations "Hal" undergoes in response to particular threats or technological advancements. This can make the concept more digestible than an abstract "never-ending process."

Highlighting Backup and Recovery as a Key Defense: In a cyber war, the ability to quickly recover from attacks or failed upgrades is paramount. "Hal's" intelligent backup system allows the Elizabeth Swann to remain operational even under duress. Hal is a compelling evolution of the AI concept, bringing in the idea of a truly autonomous and self-sustaining computer system.

EVOLVING ARCHITECTURES: EXPLORING THE POTENTIAL OF MODULAR AND UPGRADEABLE COMPUTER SYSTEMS

Thesis by Professor Douglas Storm

1. Executive Summary

The concept of a computer system where core processors can be easily swapped and upgraded, much like today's add-in accessory processors, presents an intriguing vision for the future of computing. This report examines the feasibility and potential of such a modular and evolutionary system. By examining the historical context of modularity in both software and hardware, analyzing the intricacies of current computer architecture, exploring the landscape of heterogeneous and reconfigurable computing, and weighing the potential benefits against the inherent challenges, this analysis aims to provide a comprehensive perspective on this proposal. Furthermore, the potential role of artificial intelligence in managing and optimizing a system with dynamically changing hardware configurations will be considered. While significant technical and logistical hurdles exist, the enduring appeal of modularity, coupled with advancements in related technologies, suggests that the concept of an evolving computer warrants continued attention and exploration.

2. A Look Back: The History of Modular Computing

The principle of modularity, breaking down complex systems into smaller, interconnected units, has a rich history in the realm of computing, manifesting in both the software and hardware domains.

Modularity in Software: The evolution of modular design in software represents a fundamental shift in how complex systems are conceived and developed. Initially, software architectures were largely monolithic, characterized by deeply interwoven components that made adaptation and troubleshooting a significant undertaking. The increasing complexity of software systems created a pressing need for more organized and manageable development practices, setting the stage for the emergence of modular approaches. Pioneers like Ivan Sutherland, through his work on Sketchpad in the early 1960s, laid foundational principles for interactive computer graphics and significantly influenced future software designs by introducing ideas, such as master drawings with reusable instances and hierarchical structures, that anticipated object-oriented programming and are integral to modular design. Subsequently, companies like Autodesk, with the introduction of AutoCAD, embraced an open architecture that allowed users to customize and extend the software's capabilities through modules and extensions. Similarly, PTC's development of Pro/ENGINEER marked an evolution in design software by introducing parametric and feature-based modeling, a flexibility facilitated by the modular nature of its architecture.

The formalization of modular programming as a distinct paradigm occurred in the late 1960s and 1970s, closely related to structured and object-oriented programming, all sharing the goal of simplifying the construction of large software programs through decomposition. Key concepts that underpinned this movement included information hiding and separation of concerns, emphasizing the creation of well-defined boundaries between modules. This era saw the development of programming languages that explicitly supported modularity, such as Modula, which was designed from its inception with modular principles in mind. Mesa, developed at Xerox PARC, was another early modular language, and Niklaus Wirth drew on both Mesa and Modula in designing Modula-2, which further refined these concepts and influenced later languages. The adoption of modular programming became widespread in the 1980s and continues to be a fundamental principle in virtually all major programming languages developed since the 1990s, including languages like Python, which prominently featured modules as a primary unit of code organization from its inception. The benefits of modularity extend beyond traditional software development, becoming increasingly crucial in contemporary computing paradigms such as cloud computing, where modular architectures enable distributed, scalable, and remotely accessible applications. Artificial intelligence also leverages modularity through the development of intelligent and adaptable software components, while the Internet of Things necessitates highly modular architectures to effectively manage the complexity and variability of numerous connected devices. The historical trajectory of software development clearly demonstrates the profound advantages of modularity in managing complexity, fostering code reuse, and enabling the continuous evolution of systems.
This success in the software domain provides a strong conceptual basis for exploring the potential of modularity in computer hardware, suggesting that similar principles could address the increasing complexity and rapid obsolescence observed in hardware components.

Modularity in Hardware: The concept of modular design extends beyond software into the realm of computer hardware, where the fundamental aim is to create computers with easily replaceable parts that utilize standardized interfaces. This approach offers the potential for users to upgrade specific aspects of their computer systems without the need to replace the entire machine, mirroring the flexibility seen in other modularly designed products such as cars and furniture. Early attempts at realizing modular PCs, while demonstrating innovation, encountered significant challenges. The IBM PCjr, for instance, featured a revolutionary design that allowed for easy updates through components packaged as plug-in cartridges. The Panda Project's Archistrat 4s server utilized a passive backplane, enabling the easy addition or removal of components to expand functionality, aiming for simpler maintenance in office environments. Similarly, the IBM MetaPad explored the idea of a core computing unit, about the size of a cigarette pack, that could be placed in a laptop case or docked on a desktop, envisioning an easy upgrade path for the core technology. However, these early endeavors often faced hurdles such as high costs associated with low production volumes, performance limitations stemming from the technological constraints of the time, particularly in areas like thermal management, and a lack of widespread industry standards to ensure compatibility and drive down costs. These historical experiences show that such pitfalls must be addressed if the idea of an evolving computer is to succeed.

Over time, certain aspects of modularization have become integrated into PC design, such as the evolution of PC form factors like PC/AT and ATX, developed by IBM and Intel respectively. These interface and form factor standards enabled users to configure their own PCs by selecting and installing compatible components like motherboards, power supplies, and expansion cards, representing a foundational level of modularity within electronics. Looking back at the broader history of the computer industry, IBM's System/360, announced in 1964, stands out as a significant early example of modular computer systems. This innovative approach allowed different parts of the computer to be designed by separate, specialized groups working independently, provided they adhered to a predetermined set of design rules. A key advantage of the System/360 was that module designs could be improved or upgraded after the fact without requiring a redesign of the entire system, enabling a form of continuous evolution. The success of IBM's System/360 highlights the critical importance of a well-defined architecture with clear interfaces for a modular system to flourish, fostering both innovation and compatibility. More recently, major players like Dell and Intel have shown renewed interest in modular laptop concepts, exemplified by projects like Dell's Concept Luna, which aims to make laptop upgrades more affordable and reduce electronic waste through easily replaceable components. This renewed focus from industry leaders suggests that the concept of modular computers remains relevant and potentially viable, especially with advancements in technology and an increasing emphasis on sustainability. Furthermore, the principle of modularity is not confined to computing; it has been successfully applied across various industries, including automobiles, construction, and even consumer electronics, demonstrating its broad applicability and potential benefits.
The proven advantages of modular design in diverse domains lend further credence to its potential to revolutionize computer hardware design.

3. Understanding Today's Computer Architecture

To assess the feasibility of a modular and upgradeable computer system, it is crucial to understand the fundamental architecture of modern computers, particularly the roles and interactions of its core components.

Core Components and Communication: At the heart of a modern computer lies the Central Processing Unit (CPU), often referred to as the "brain" of the system, responsible for executing the commands and processes needed for the computer and its operating system. Constructed from billions of transistors, the CPU can have multiple processing cores designed for complex, single-threaded tasks. The basic operation of a CPU involves a cycle of fetching an instruction from memory, decoding it to determine the operation and operands, and then executing the instruction in the appropriate unit, such as the Arithmetic Logic Unit (ALU) for calculations. Key architectural components of a CPU include the Control Unit, which manages instructions and data flow; the Clock, which synchronizes operations; the ALU, which performs calculations; Registers, which provide high-speed temporary storage; Caches, which store frequently used data for faster access; and Buses, which facilitate internal communication. Complementing the CPU is the Graphics Processing Unit (GPU), a specialized processor with many smaller, more specialized cores designed to deliver massive performance by working together on parallel tasks. GPUs excel at highly parallel tasks such as rendering visuals during gameplay, manipulating video data for content creation, and computing results in intensive Artificial Intelligence (AI) workloads. Modern GPUs are equipped with thousands of cores capable of performing calculations simultaneously, and since 2003 their peak floating-point throughput has generally exceeded that of CPUs. Both CPUs and GPUs utilize a hierarchical memory structure. CPUs have a small, fast cache memory located close to the cores and larger, slower RAM farther away. GPUs also use on-chip memory like registers and cache for quick access but rely on global memory for larger data storage.
The fundamental difference in architecture and optimization between CPUs, designed for complex, single-threaded operations, and GPUs, optimized for parallel processing, presents a significant challenge to the idea of a universal, easily swappable "core processor" that can efficiently handle all types of computational workloads. Different tasks inherently benefit from the distinct strengths of these processing units.
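To make the fetch-decode-execute cycle concrete, the following Python sketch models a hypothetical accumulator machine. The 16-bit instruction encoding, the opcode numbers, and the separation of program and data memory are illustrative assumptions, not any real instruction set.

```python
# Toy accumulator machine illustrating the fetch-decode-execute cycle.
# The 16-bit instruction format and opcode numbering are hypothetical.
from typing import List

# Decoded operation names, selected by the upper bits of each instruction word.
OPCODES = {0: "HALT", 1: "LOAD", 2: "ADD", 3: "SUB", 4: "STORE"}


def run(program: List[int], memory: List[int]) -> List[int]:
    pc = 0   # program counter: index of the next instruction to fetch
    acc = 0  # accumulator: the machine's single working register
    while True:
        word = program[pc]            # fetch the instruction word
        pc += 1
        opcode = OPCODES[word >> 8]   # decode: upper bits select the operation
        address = word & 0xFF         # decode: lower 8 bits name a memory cell
        # execute: route the operation to the appropriate unit
        if opcode == "HALT":
            return memory
        elif opcode == "LOAD":
            acc = memory[address]
        elif opcode == "ADD":         # ALU addition
            acc += memory[address]
        elif opcode == "SUB":         # ALU subtraction
            acc -= memory[address]
        elif opcode == "STORE":
            memory[address] = acc
```

For example, `run([0x100, 0x201, 0x402, 0x000], [5, 7, 0])` loads cell 0, adds cell 1, stores the sum in cell 2, halts, and returns `[5, 7, 12]`. A real CPU pipelines these stages and decodes far richer formats, but the loop shows why the instruction set is baked into the processor: a module speaking a different encoding could not run the same program.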

Interconnect Technologies: The communication between the CPU and GPU, as well as other components within a computer, relies on various interconnect technologies. Typically, the CPU and GPU communicate over the PCI Express bus, often utilizing memory-mapped I/O. In this process, the CPU constructs buffers in system memory containing data, commands, and GPU code. It then stores the address of these buffers in a GPU register and signals the GPU to begin execution, often by writing to another specific GPU register. Beyond the CPU-GPU connection, other high-speed interconnect technologies facilitate communication between CPUs, memory controllers, and input/output (I/O) controllers. Intel's QuickPath Interconnect (QPI) and its successor, Ultra Path Interconnect (UPI), along with AMD's HyperTransport and Infinity Fabric, are examples of such technologies designed to provide high bandwidth and low latency for efficient data exchange within the system. The industry also relies on various standardized interconnects, including the ubiquitous Universal Serial Bus (USB) and PCI Express (PCIe), as well as emerging standards like Compute Express Link (CXL) and Universal Chiplet Interconnect Express (UCIe), which aim to address the growing demand for high-bandwidth chip-to-chip communication, particularly for applications in AI and High-Performance Computing (HPC). The complexity and the sheer variety of these current interconnect technologies underscore the significant standardization efforts that would be required to establish a universal interface capable of supporting a wide range of swappable core processors from different manufacturers.
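The memory-mapped command submission described above can be simulated in a few lines. This is a sketch under stated assumptions: the register names `CMD_BUFFER_ADDR` and `DOORBELL`, the command strings, and the `SimulatedGPU` class are hypothetical stand-ins, and real GPUs define their own register maps and usually use ring buffers rather than a single buffer.

```python
# Simulated CPU-to-GPU command submission through memory-mapped registers.
# Register names and command strings are hypothetical.
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SimulatedGPU:
    system_memory: Dict[int, List[str]]  # shared view of system RAM: address -> buffer
    registers: Dict[str, int] = field(
        default_factory=lambda: {"CMD_BUFFER_ADDR": 0, "DOORBELL": 0}
    )
    executed: List[str] = field(default_factory=list)

    def mmio_write(self, register: str, value: int) -> None:
        """Models a CPU store that lands in a device register."""
        self.registers[register] = value
        if register == "DOORBELL" and value == 1:
            self._run_commands()

    def _run_commands(self) -> None:
        # The GPU follows the address the CPU stored, reads the buffer
        # from system memory, then clears the doorbell to signal completion.
        buffer = self.system_memory[self.registers["CMD_BUFFER_ADDR"]]
        self.executed.extend(buffer)
        self.registers["DOORBELL"] = 0


def submit(gpu: SimulatedGPU, address: int, commands: List[str]) -> None:
    gpu.system_memory[address] = commands       # 1. build the buffer in system memory
    gpu.mmio_write("CMD_BUFFER_ADDR", address)  # 2. store its address in a GPU register
    gpu.mmio_write("DOORBELL", 1)               # 3. write another register to start
```

Every step in `submit` depends on a register layout agreed between driver and device, which is exactly the kind of contract a universal swappable-processor interface would need to standardize.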

The Role of the Chipset: In traditional computer architecture, the chipset plays a crucial role in managing the data flow between the CPU, memory, and peripheral devices. Historically, the chipset consisted of two main chips: the Northbridge and the Southbridge. The Northbridge acted as a bridge connecting the CPU to high-speed components such as random-access memory (RAM) and graphics controllers, while the Southbridge handled slower-speed peripherals and I/O functions. However, modern computer architecture has seen a trend towards integrating more functionalities, such as memory controllers and PCIe interfaces, directly into the CPU die itself. As a result, the role of the chipset, often now consolidated into a single chip referred to as the Platform Controller Hub (PCH) or Fusion Controller Hub (FCH), has evolved to primarily handle lower-speed I/O operations, communicating with the CPU over a dedicated link such as Intel's Direct Media Interface (DMI) or a set of PCIe lanes. Despite these changes, the chipset remains a vital intermediary and controller within the system. Therefore, dynamically replacing the CPU would likely necessitate a chipset architecture that possesses the flexibility to adapt to different processor types and their specific communication requirements, ensuring seamless integration and operation of the modular system.

4. The Potential of Heterogeneous Computing

The concept of heterogeneous computing, which involves utilizing multiple types of computing cores within a single system, holds significant relevance to the idea of modular and upgradeable computer systems.

Concept and Importance: Heterogeneous computing is characterized by the integration of diverse processing units, such as Central Processing Units (CPUs), Graphics Processing Units (GPUs), Application-Specific Integrated Circuits (ASICs), Field-Programmable Gate Arrays (FPGAs), and Neural Processing Units (NPUs), within a single computing system. The primary advantage of this approach lies in its ability to assign different computational workloads to the processing unit best suited for the task, leading to substantial improvements in both performance and energy efficiency. This paradigm has become increasingly important across various domains, including Artificial Intelligence (AI) and Machine Learning (ML), where the parallel processing capabilities of GPUs and specialized accelerators are crucial for handling complex algorithms and large datasets. Heterogeneous computing also plays a vital role in High-Performance Computing (HPC) for scientific simulations and data analysis, as well as in mobile devices where power efficiency and performance are critical. The demonstrated success of heterogeneous computing in leveraging specialized processors for specific tasks strongly suggests the potential benefits of a modular computer system where users could select and install the most appropriate processor module tailored to their individual needs and intended applications.
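The core idea of routing each workload to the best-suited processor can be sketched as a greedy placement rule. The throughput table below is an invented placeholder, not benchmark data, and the task categories are hypothetical.

```python
# Greedy placement of tasks onto the processor type with the highest
# throughput for that kind of work. Throughput figures are invented placeholders.
from typing import Dict, List, Tuple

# Work units per millisecond for each (processor, task kind) pair.
THROUGHPUT: Dict[str, Dict[str, float]] = {
    "CPU": {"branchy": 4.0, "matrix": 1.0, "inference": 0.5},
    "GPU": {"branchy": 0.5, "matrix": 20.0, "inference": 8.0},
    "NPU": {"branchy": 0.1, "matrix": 5.0, "inference": 25.0},
}


def assign(tasks: List[Tuple[str, float]]) -> List[Tuple[str, str, float]]:
    """Return (task kind, chosen processor, estimated ms) for each task."""
    plan = []
    for kind, work in tasks:
        best = max(THROUGHPUT, key=lambda p: THROUGHPUT[p][kind])
        plan.append((kind, best, work / THROUGHPUT[best][kind]))
    return plan
```

With these numbers, control-heavy work lands on the CPU, dense matrix work on the GPU, and inference on the NPU. The rule deliberately ignores queueing and the cost of moving data to the chosen processor, both of which a real scheduler would have to weigh; in a modular system, swapping a module would simply change a row of the table.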

Heterogeneous System Architecture (HSA): A significant advancement in the field is the Heterogeneous System Architecture (HSA), a cross-vendor set of specifications aimed at facilitating the seamless integration and collaboration between different types of processing units, particularly CPUs and GPUs, on the same bus with shared memory and tasks. The fundamental goal of HSA is to reduce the communication latency that traditionally exists between disparate processing units and to enhance their compatibility from a programmer's perspective, thereby simplifying the development of applications that can effectively utilize the combined power of heterogeneous processors. Key features of HSA include a unified virtual address space, which allows different processors to share memory and data efficiently by passing pointers, and a heterogeneous task queuing mechanism that enables any core to schedule work for any other. Furthermore, the HSA platform supports a wide range of programming languages, including OpenCL, C++, and Java, making it easier for developers to target heterogeneous systems. HSA represents a notable step towards more tightly integrated heterogeneous systems and could potentially pave the way for future architectures that support even greater ease of interchangeability of processing units.
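Two of the HSA features described above, a shared virtual address space and queues that any agent can write to, can be modeled in simplified form. This is not the HSA runtime API: the `Agent` class, its methods, and the use of list indices as "pointers" are hypothetical simplifications.

```python
# Simplified model of two HSA ideas: agents share one address space (they
# pass pointers, modeled as list indices, instead of copying data), and any
# agent may enqueue work on any other agent's queue. Names are hypothetical.
from collections import deque
from typing import Callable, Deque, List, Tuple

Memory = List[float]
Kernel = Callable[[Memory, int, int], None]  # (memory, pointer, length)


class Agent:
    def __init__(self, name: str, memory: Memory) -> None:
        self.name = name
        self.memory = memory  # every agent holds the same object: no copies
        self.queue: Deque[Tuple[Kernel, int, int]] = deque()

    def enqueue_on(self, target: "Agent", kernel: Kernel, ptr: int, length: int) -> None:
        # Only a reference to the data (ptr, length) travels with the task.
        target.queue.append((kernel, ptr, length))

    def drain(self) -> None:
        while self.queue:
            kernel, ptr, length = self.queue.popleft()
            kernel(self.memory, ptr, length)


def double(mem: Memory, ptr: int, length: int) -> None:
    for i in range(ptr, ptr + length):
        mem[i] *= 2.0
```

Because the CPU and GPU agents reference the same memory, a CPU can hand the GPU a slice of data by position alone, and the GPU can schedule follow-up work back on the CPU without a copy in either direction.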

Examples of Heterogeneous Architectures: Several prominent examples of heterogeneous architectures exist in modern computing. ARM's big.LITTLE architecture, widely used in mobile devices, combines high-performance processor cores ("big") with energy-efficient cores ("LITTLE") on a single chip to balance power consumption and computational performance. Another common example is the System-on-Chip (SoC), an integrated circuit that combines various processing units, such as CPUs, GPUs, Neural Processing Units (NPUs), and Digital Signal Processors (DSPs), onto a single substrate, offering a high degree of integration and efficiency, particularly in mobile and embedded systems. The prevalence of these heterogeneous architectures in a wide range of modern devices underscores the industry's recognition of the advantages of using different types of processors to optimize for specific needs, a concept that aligns directly with the vision of a computer system with adaptable processing power.
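The big/LITTLE balance can be illustrated with a toy placement policy. This sketch does not reproduce any vendor's actual scheduler; the two utilization thresholds are illustrative assumptions.

```python
# Toy placement policy in the spirit of big.LITTLE: a task runs on an
# efficient LITTLE core until its utilization rises above an up-threshold,
# and returns once it drops below a lower down-threshold.
# The thresholds are illustrative assumptions.
from typing import List

UP_THRESHOLD = 0.8
DOWN_THRESHOLD = 0.3


def place(current: str, utilization: float) -> str:
    if current == "LITTLE" and utilization > UP_THRESHOLD:
        return "big"
    if current == "big" and utilization < DOWN_THRESHOLD:
        return "LITTLE"
    return current  # inside the hysteresis band: stay put


def trace(samples: List[float]) -> List[str]:
    core = "LITTLE"
    history = []
    for utilization in samples:
        core = place(core, utilization)
        history.append(core)
    return history
```

For the utilization samples `[0.2, 0.9, 0.5, 0.25, 0.5]`, the task moves to a big core at 0.9, stays there at 0.5, and returns to a LITTLE core at 0.25. The gap between the two thresholds keeps a task whose load hovers near a single cut-off from migrating back and forth.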

Challenges in Heterogeneous Computing: While heterogeneous computing offers numerous benefits, it also presents several challenges, particularly in the realm of software development and optimization. Effectively partitioning computational workloads across different types of processors, managing the movement of data between their potentially distinct memory hierarchies and interconnects, and efficiently exploiting parallelism at various levels of granularity are complex tasks. Furthermore, developers must carefully balance trade-offs between performance, energy consumption, accuracy, and reliability, while also striving to ensure the portability and scalability of their software across different heterogeneous platforms. These complexities highlight the potential challenges that the software ecosystem might face in supporting a modular and upgradeable computer system where the underlying processing hardware could change frequently.
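The simplest form of workload partitioning is static load balancing: split independent work items in proportion to each processor's throughput so that all processors finish at about the same time. The following sketch assumes the throughput figures are already known; the processor names are placeholders.

```python
# Static load balancing: split independent work items between processors in
# proportion to their throughput so they finish at roughly the same time.
from typing import Dict


def partition(n_items: int, throughput: Dict[str, float]) -> Dict[str, int]:
    total = sum(throughput.values())
    shares = {p: int(n_items * t / total) for p, t in throughput.items()}
    # Rounding down can leave a few items unassigned; give them to the fastest processor.
    fastest = max(throughput, key=throughput.get)
    shares[fastest] += n_items - sum(shares.values())
    return shares
```

For instance, `partition(1000, {"CPU": 1.0, "GPU": 4.0})` assigns 200 items to the CPU and 800 to the GPU. The sketch ignores the cost of moving data between the processors' memory hierarchies, which is precisely the complication the paragraph above identifies, and it assumes throughput stays fixed, an assumption that breaks if processor modules can be swapped.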

5. Technical Hurdles to Dynamic Processor Upgrades

The concept of dynamically adding or replacing core processors in a running computer system presents a multitude of significant technical challenges that need careful consideration.

Operating System Complexity: Modern operating systems are typically designed and optimized for specific processor architectures, such as x86 or ARM. Implementing dynamic changes to the core processor would require the OS to possess the capability to seamlessly recognize, initialize, and manage the newly introduced processor, potentially with a different architecture or instruction set, all while the system remains operational. This would involve addressing considerations for per-CPU data structures that are fundamental to OS operation and ensuring their dynamic update to reflect the change in processing resources. Such a level of on-the-fly hardware adaptation would necessitate substantial advancements in operating system design, moving beyond the current paradigms that typically assume a relatively static set of core processors and often require a system reboot to recognize significant hardware changes.
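The per-CPU bookkeeping problem can be sketched as a pair of online and offline handlers. The names `PerCpuState`, `Kernel`, `cpu_online`, and `cpu_offline` are hypothetical, and a real kernel's hotplug machinery is far more involved; the sketch only shows the ordering constraints and the instruction-set check that a processor with a different architecture would introduce.

```python
# Minimal model of maintaining per-CPU data structures as processors come and
# go at runtime. All names are hypothetical, not a real kernel interface.
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PerCpuState:
    isa: str                                            # instruction set of this processor
    run_queue: List[str] = field(default_factory=list)  # tasks scheduled here


class Kernel:
    def __init__(self) -> None:
        self.per_cpu: Dict[int, PerCpuState] = {}

    def cpu_online(self, cpu_id: int, isa: str) -> None:
        # Set up per-CPU state before the scheduler is allowed to use the CPU.
        if cpu_id in self.per_cpu:
            raise ValueError(f"CPU {cpu_id} is already online")
        self.per_cpu[cpu_id] = PerCpuState(isa=isa)

    def cpu_offline(self, cpu_id: int) -> None:
        # Tasks can only move to a CPU that executes the same instruction set.
        state = self.per_cpu[cpu_id]
        survivors = [c for c, s in self.per_cpu.items()
                     if c != cpu_id and s.isa == state.isa]
        if state.run_queue and not survivors:
            raise RuntimeError(f"no compatible CPU can take over CPU {cpu_id}'s tasks")
        for task in state.run_queue:
            # Migrate each task to the currently least-loaded compatible CPU.
            target = min(survivors, key=lambda c: len(self.per_cpu[c].run_queue))
            self.per_cpu[target].run_queue.append(task)
        del self.per_cpu[cpu_id]  # tear down only after migration succeeds
```

Refusing to offline the last processor of a given instruction set, rather than stranding its tasks, is one small example of the policy decisions an OS would need before mixed-architecture hot replacement could be safe.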

Hardware Interfaces and Compatibility: Core processors communicate with the motherboard and other system components through highly specific sockets and interfaces. Ensuring compatibility between a potentially diverse range of swappable processor types and a standardized motherboard interface capable of supporting dynamic swapping would be a formidable engineering challenge. This would not only involve the physical connection but also the underlying communication protocols that govern data exchange between the processor and the rest of the system. Furthermore, different processors have varying power consumption characteristics and generate different amounts of heat. A modular system would need sophisticated power delivery mechanisms and thermal management solutions capable of adapting to the requirements of different installed processors to prevent system instability or damage.

Driver Development and Compatibility: Operating systems rely on device drivers, software components dedicated to communicating with particular hardware, including processor-specific functions such as power and frequency management. Dynamically adding or replacing a core processor could potentially necessitate the loading and initialization of new drivers on the fly, without interrupting system operation. Maintaining driver compatibility across a wide array of processor generations, manufacturers, and potentially different underlying architectures would introduce a significant layer of complexity for both operating system vendors and hardware manufacturers. The software ecosystem would need to be highly adaptable to seamlessly accommodate a system with dynamically changing core processing units.
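On-the-fly driver loading usually comes down to matching a newly detected device's identifiers against a table of registered drivers and calling the match's initialization routine. The sketch below assumes a (vendor ID, device ID) pair in the style of PCI identifiers; the `DriverRegistry` class, the IDs, and the probe routine are all hypothetical.

```python
# Sketch of on-the-fly driver binding: when a processor module is detected,
# look up a driver by (vendor_id, device_id) and call its probe routine.
# The registry class, IDs, and probe behavior are hypothetical.
from typing import Callable, Dict, Optional, Tuple

DeviceId = Tuple[int, int]
Probe = Callable[[DeviceId], str]


class DriverRegistry:
    def __init__(self) -> None:
        self._drivers: Dict[DeviceId, Probe] = {}

    def register(self, dev: DeviceId, probe: Probe) -> None:
        self._drivers[dev] = probe

    def on_hotplug(self, dev: DeviceId) -> Optional[str]:
        probe = self._drivers.get(dev)
        if probe is None:
            return None  # no matching driver: leave the device unbound instead of crashing
        return probe(dev)
```

Registering a probe for `(0x1234, 0x0001)` lets a matching module bind as soon as it appears, while an unknown module simply stays unbound. The difficulty the paragraph describes lies in keeping such tables, and the drivers behind them, current across every processor generation and vendor.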

System Stability and Data Integrity: The act of swapping a core processor in a running computer system inherently carries a significant risk of system instability and potential data corruption. Ensuring a smooth and uninterrupted transition during such an operation, while also safeguarding the integrity of data being processed, would require the implementation of robust error handling and recovery mechanisms at both the hardware and software levels. The system would need to gracefully handle the removal of an active processor and the introduction of a new one, ensuring that all ongoing processes are migrated or paused appropriately and that data in volatile memory is not lost or corrupted during the switch.
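The pause, transfer, and verify sequence can be sketched with a checksum guarding the handover. The "processors" here are stand-ins: `transfer` is a hypothetical callback representing the move to the new processor, and a real migration would also have to handle caches, interrupts, and in-flight memory transactions.

```python
# Sketch of a guarded switch-over: pause, checkpoint, hand state to the new
# processor, and verify integrity with SHA-256 before resuming.
import hashlib
import json
from typing import Any, Callable, Dict

State = Dict[str, Any]


def digest(state: State) -> str:
    # Canonical JSON (sorted keys) so equal states always hash identically.
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def swap_processor(state: State, transfer: Callable[[State], State]) -> State:
    state["paused"] = True                       # 1. quiesce: stop issuing new work
    checkpoint = json.loads(json.dumps(state))   # 2. deep copy of the volatile state
    expected = digest(checkpoint)
    restored = transfer(checkpoint)              # 3. hand state to the new processor
    if digest(restored) != expected:             # 4. verify before resuming
        state["paused"] = False                  # roll back: resume on the old processor
        raise RuntimeError("state corrupted during swap; keeping old processor")
    restored["paused"] = False                   # 5. resume on the new processor
    return restored
```

The key design choice is that the old processor's state is not discarded until the copy has been verified, so a failed swap degrades to a brief pause rather than data loss.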

Hot-Swapping Technology: While the concept of hot-swapping, replacing or adding components to a running system, is well-established for certain peripherals like hard drives and USB devices, it is considerably less common for core processors in general-purpose consumer computers. Server-grade systems sometimes offer hot-swappable CPUs, but these implementations typically come with specific requirements, limitations, and often involve a controlled shutdown and restart of the affected processor socket, rather than a truly seamless, on-the-fly replacement without any interruption. This suggests that current hot-swapping technology for CPUs is not yet mature or widely adopted for the kind of dynamic, user-initiated upgrades envisioned, indicating a need for significant advancements in this area to make it a practical reality for consumer computing.
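The controlled offline and online step is visible today in Linux, which lets an administrator take a logical CPU out of service by writing `0` to `/sys/devices/system/cpu/cpuN/online` and bring it back with `1`. The sketch below wraps that interface. Writing the real files requires root privileges, some CPUs (often `cpu0`) cannot be taken offline, and the `sysfs_root` parameter is an addition so the functions can be pointed at a scratch directory for testing.

```python
# Wrapper around the Linux sysfs interface for logical CPU hotplug
# (writing "0" or "1" to /sys/devices/system/cpu/cpuN/online).
# Real use requires root; sysfs_root can be redirected for testing.
import os

DEFAULT_SYSFS_CPU_ROOT = "/sys/devices/system/cpu"


def set_cpu_online(cpu: int, online: bool,
                   sysfs_root: str = DEFAULT_SYSFS_CPU_ROOT) -> None:
    path = os.path.join(sysfs_root, f"cpu{cpu}", "online")
    with open(path, "w") as f:
        f.write("1" if online else "0")


def is_cpu_online(cpu: int, sysfs_root: str = DEFAULT_SYSFS_CPU_ROOT) -> bool:
    path = os.path.join(sysfs_root, f"cpu{cpu}", "online")
    with open(path) as f:
        return f.read().strip() == "1"
```

This only removes a logical CPU from the scheduler's view; physically removing or inserting a processor package additionally depends on firmware and platform support, which is why true hot-swapping remains largely confined to specialized server hardware.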

6. Reconfigurable Computing: A Glimmer of Possibility?

The field of reconfigurable computing offers a potentially relevant perspective on the concept of an evolving computer system.

Concept of Reconfigurable Computing: Reconfigurable computing is a computer architecture that aims to bridge the gap between the flexibility of software and the high performance of hardware, often by utilizing flexible hardware platforms such as Field-Programmable Gate Arrays (FPGAs). Unlike traditional microprocessors with fixed functionality, reconfigurable hardware can be adapted and customized during runtime by loading a new circuit configuration, effectively providing new computational blocks without the need to manufacture new chips. This ability to dynamically alter the hardware's functionality to suit specific computational needs aligns with the idea of a computer system that can evolve and adapt over time through the replacement or addition of processing units.
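The lookup table (LUT) is the basic logic element in most FPGAs, and it shows in miniature what "loading a new circuit configuration" means: the same element computes a different function once a new truth table is written into it. The following teaching model of a 2-input LUT is a simplification and not how any particular FPGA toolchain exposes LUTs.

```python
# Software model of a 2-input lookup table (LUT). "Reconfiguring" means
# loading a new 4-bit truth table; the same element then computes a new function.


class Lut2:
    def __init__(self, truth_table: int) -> None:
        self.configure(truth_table)

    def configure(self, truth_table: int) -> None:
        if not 0 <= truth_table <= 0b1111:
            raise ValueError("a 2-input LUT holds exactly 4 bits")
        self.table = truth_table

    def __call__(self, a: int, b: int) -> int:
        index = (a << 1) | b            # the two inputs select one stored bit
        return (self.table >> index) & 1
```

Configured with `0b1000`, the LUT behaves as an AND gate (only inputs 1, 1 select the set bit); reconfigured with `0b0110`, the identical element becomes an XOR gate. An FPGA combines many such elements with programmable routing, which is why changing its function requires no new silicon.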

Applications of Reconfigurable Computing: Reconfigurable computing has found applications in various demanding fields. In High-Performance Computing (HPC), FPGAs can be used as accelerators to speed up specific tasks and algorithms. Hobbyists and students also utilize affordable FPGA boards for computer emulation, recreating vintage computer systems or implementing novel architectural designs. Furthermore, reconfigurable computing has been employed in specialized areas like codebreaking and in embedded systems where adaptability and performance are critical. The diverse applications of reconfigurable computing highlight its inherent versatility and suggest the potential for future advancements in adaptable hardware that could be relevant to the concept of an evolving computer.

Challenges and Limitations: Despite its promise, reconfigurable computing also faces several challenges and limitations. Programming and effectively utilizing reconfigurable hardware, particularly FPGAs, can be complex, often requiring specialized hardware description languages (HDLs) and a deep understanding of hardware design principles. Additionally, there can be trade-offs between performance, power consumption, and the granularity of reconfiguration, with fine-grained reconfigurable architectures sometimes exhibiting increased power consumption and delay. While reconfigurable computing demonstrates the potential for hardware to be dynamic and adaptable, current technologies might not yet be readily applicable for seamlessly replacing a general-purpose core processor in a user-friendly manner for typical consumer computing needs.

Adaptable Hardware Research: Ongoing research and development in the area of adaptable hardware, often intertwined with the concept of software-defined hardware, indicates a potential future where hardware can be reconfigured and upgraded more easily. Software-defined hardware aims to create devices that are more programmable and flexible, allowing their functionality to be enhanced or changed through software updates rather than requiring physical hardware replacements. This trend, potentially driven by advancements in Artificial Intelligence (AI) and a growing emphasis on software-centric approaches to hardware design, suggests a future where the core processing capabilities of a computer could potentially be reconfigured or significantly enhanced through software mechanisms, perhaps simplifying the process of upgrading and adapting the system over its lifespan.

7. Weighing the Pros and Cons of Modular Computer Hardware

The concept of modular computer hardware, where core processors and other components can be easily swapped and upgraded, presents a compelling set of potential benefits alongside significant challenges that need to be carefully considered.

Potential Benefits: One of the most significant advantages of a modular computer system is its potential for longevity. Easier upgrades and replacements of individual components, such as the core processor, could extend the usable lifespan of a computer, reducing the frequency with which entire systems need to be replaced and thereby contributing to a reduction in electronic waste, in line with growing environmental concerns. This modularity could also lead to greater cost-effectiveness over time. Instead of purchasing an entirely new computer to gain access to a faster processor or other improved hardware, users could potentially upgrade individual modules at a fraction of the cost. Furthermore, a modular design would offer enhanced customization. Users could tailor their systems to their specific needs and budgets by selecting different types of processors, perhaps choosing a more powerful CPU for demanding tasks or a more energy-efficient one for everyday use, or by adding specialized modules as required. Maintenance and repair could also be simplified, as faulty modules could be easily identified and replaced, potentially reducing downtime and the need for specialized repair services. The flexibility and scalability of a modular system would allow users to adapt to changing computing needs by adding or upgrading specific modules as technology evolves or their requirements change. Finally, a modular approach could foster innovation in hardware design by making it easier and less expensive for manufacturers to experiment with new designs and functionalities in individual modules without the need to overhaul an entire computer system.

Potential Challenges: Despite these compelling benefits, the implementation of modular computer hardware with dynamically swappable core processors faces several significant challenges. Ensuring software compatibility across a potentially wide range of different processor types and architectures with existing operating systems and applications would be a complex undertaking. Similarly, the development and maintenance of drivers required for the operating system to communicate effectively with a diverse set of swappable processors would present a considerable challenge. Managing the complexity of diverse hardware configurations within a single system, where the core processor could be changed by the user, would also require sophisticated operating system support and could potentially lead to user confusion. A fundamental prerequisite for a successful modular system is the establishment of industry-wide standards for interfaces, communication protocols, power delivery, and thermal management to ensure compatibility and interoperability between modules from different manufacturers. The initial cost of individual high-performance modular components might also be a barrier to widespread adoption. Furthermore, the interconnect performance between swappable processors and other system components could become a bottleneck, limiting the overall performance gains from upgrades. The physical act of frequently swapping modules could also lead to wear and tear on connectors, potentially impacting the long-term reliability of the system. In the software domain, modularization can sometimes lead to increased complexity and code volume. Finally, the dynamic addition and removal of core processing units could introduce security considerations that would need to be carefully addressed to prevent vulnerabilities.

Table: Advantages and Disadvantages of Modular Computer Hardware

8. The Role of AI in Managing an Evolving Computer

Artificial Intelligence (AI) has the potential to play a transformative role in managing and optimizing a modular computer system with dynamically changing processing units.

Hardware Resource Management: AI algorithms could be employed to analyze system usage patterns in real time and dynamically allocate available hardware resources, including processing power from different installed processor modules, based on the current demands. Machine learning models could be trained to predict future resource needs based on user behavior and application requirements, allowing the system to proactively adjust the distribution of workloads across the available processors for optimal performance. Furthermore, AI could intelligently manage power consumption and thermal profiles of the system by monitoring the active processors and their workloads, making adjustments to ensure efficient operation and prevent overheating.
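As a rough illustration of the allocation idea above, the sketch below forecasts each task's demand with an exponential moving average and places each task on the module with the lowest projected load relative to its capacity. The moving average is a deliberately simple stand-in for the machine-learning predictor the text describes. The module names, throughput figures, and the `alpha` smoothing factor are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ProcessorModule:
    """A hypothetical swappable processor module."""
    name: str
    throughput: float  # relative work units per second (assumed metric)
    assigned: list = field(default_factory=list)
    load: float = 0.0  # sum of predicted demand placed on this module

    def relative_load(self, extra: float = 0.0) -> float:
        # Load normalised by capacity, so faster modules absorb more work.
        return (self.load + extra) / self.throughput


def ema_forecast(history: list[float], alpha: float = 0.5) -> float:
    """Exponential moving average: a simple stand-in for an ML demand model."""
    if not history:
        return 0.0
    estimate = history[0]
    for sample in history[1:]:
        estimate = alpha * sample + (1 - alpha) * estimate
    return estimate


def allocate(tasks: dict[str, list[float]], modules: list[ProcessorModule]) -> dict[str, str]:
    """Greedily place each task on the module with the lowest projected relative load."""
    forecasts = {task: ema_forecast(hist) for task, hist in tasks.items()}
    placement = {}
    # Heaviest tasks first; ties keep their input order because sorted() is stable.
    for task in sorted(forecasts, key=forecasts.get, reverse=True):
        demand = forecasts[task]
        best = min(modules, key=lambda m: m.relative_load(demand))
        best.load += demand
        best.assigned.append(task)
        placement[task] = best.name
    return placement
```

For example, with a hypothetical `cpu-a` module (throughput 4.0) and `npu-b` module (throughput 8.0), the faster module ends up carrying roughly twice the predicted demand. Greedy placement is only one possible policy; a learned scheduler could replace `allocate` without changing the module model.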

Dynamic System Optimization: Beyond resource allocation, AI could contribute to the dynamic optimization of the entire computer system. By learning the specific performance characteristics of different installed processor modules, AI algorithms could fine-tune system settings, such as clock speeds and memory timings, to maximize efficiency and responsiveness for the current hardware configuration. Machine learning techniques could also be used to identify potential performance bottlenecks within the modular system and suggest optimal processor configurations or even recommend specific upgrades based on the user's typical tasks and performance goals. Moreover, AI could assist in the complex task of driver management by automatically identifying, recommending, and potentially installing the necessary drivers for newly added processor modules, streamlining the user experience and ensuring proper system functionality.
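The driver-management step could look something like the following minimal sketch. It assumes that each module reports a vendor/device identifier pair when it is inserted, much as PCI devices do, and that the system keeps a local registry of known drivers. The identifiers, driver names, and the class-level fallback policy are hypothetical.

```python
from typing import Optional

# Hypothetical registry: (vendor_id, device_id) -> driver name.
DRIVER_REGISTRY = {
    (0xAAAA, 0x0001): "acme_cpu_v2",
    (0xBBBB, 0x0002): "acme_gpu_v1",
}

# Hypothetical class-level fallbacks used when no exact match exists.
CLASS_FALLBACKS = {
    "cpu": "generic_cpu",
    "gpu": "generic_gpu",
}


def recommend_driver(vendor_id: int, device_id: int, device_class: str) -> Optional[str]:
    """Return an exact driver match, else a class fallback, else None."""
    exact = DRIVER_REGISTRY.get((vendor_id, device_id))
    if exact is not None:
        return exact
    return CLASS_FALLBACKS.get(device_class)


def on_module_inserted(vendor_id: int, device_id: int, device_class: str,
                       installed: set[str]) -> str:
    """Decide what to do when a processor module is hot-added."""
    driver = recommend_driver(vendor_id, device_id, device_class)
    if driver is None:
        return "no driver available: flag module for manual review"
    if driver in installed:
        return f"bind existing driver {driver}"
    installed.add(driver)  # stand-in for a real install step
    return f"install and bind {driver}"
```

Preferring an exact match and then falling back to a generic class driver keeps a new module usable, perhaps at reduced performance, until a specific driver is available.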

AI in Hardware Design and Development: The role of AI extends beyond managing an existing modular system to potentially aiding in the very design and development of more efficient and robust modular hardware components in the future. AI-assisted design tools can help engineers explore a wider range of potential hardware architectures for modular processors, optimizing their internal design based on specific performance targets, power efficiency requirements, and manufacturing constraints. AI can also be leveraged for hardware optimization, such as improving the layout of components on a processor module to enhance signal integrity and thermal dissipation, and for accelerated testing through simulations, allowing for the early identification and correction of potential design flaws, ultimately contributing to the creation of more reliable and performant modular computer components.
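To make "exploring a wider range of potential hardware architectures" concrete, here is a toy design-space exploration. It enumerates candidate module configurations, discards any that exceed a power budget, and keeps the Pareto-optimal set, meaning the designs that no other design beats on both performance and power. The performance and power formulas are invented placeholders, not real silicon figures. An AI-assisted tool would substitute learned or simulated models and search far larger spaces.

```python
from itertools import product

# Hypothetical design knobs for a processor module.
CORE_COUNTS = [2, 4, 8]
CLOCKS_GHZ = [1.0, 2.0, 3.0]


def estimate(cores: int, clock: float) -> tuple[float, float]:
    """Toy models: performance scales sublinearly with cores; power grows with clock squared."""
    performance = cores ** 0.8 * clock
    power_w = cores * (0.5 + 0.4 * clock ** 2)
    return performance, power_w


def explore(power_budget_w: float) -> list[tuple[int, float, float, float]]:
    """Return (cores, clock, performance, power) for Pareto-optimal designs within budget."""
    feasible = []
    for cores, clock in product(CORE_COUNTS, CLOCKS_GHZ):
        perf, power = estimate(cores, clock)
        if power <= power_budget_w:  # stand-in for thermal/manufacturing constraints
            feasible.append((cores, clock, perf, power))
    pareto = []
    for d in feasible:
        # d is dominated if another design is at least as fast and no hungrier,
        # and strictly better on at least one of the two.
        dominated = any(
            o[2] >= d[2] and o[3] <= d[3] and (o[2] > d[2] or o[3] < d[3])
            for o in feasible
        )
        if not dominated:
            pareto.append(d)
    return sorted(pareto, key=lambda d: d[3])
```

With a 10 W budget, the toy model rejects the 2-core, 3 GHz design because the 8-core, 1 GHz design is both slightly faster and lower power.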

9. Conclusion: Feasibility and the Future of Computer Design

The journey through the history of modular computing reveals a recurring aspiration to create adaptable and upgradeable systems, with notable early attempts in both software and hardware. However, the intricacies of current computer architecture, characterized by complex interconnect technologies and tightly integrated components, present significant technical hurdles to the realization of truly dynamic core processor replacement. Nevertheless, the advancements in heterogeneous computing, which demonstrates the benefits of utilizing specialized processing units, and the ongoing research into reconfigurable and software-defined hardware, which hints at the potential for more adaptable computing resources, offer promising pathways.

Weighing the potential benefits of modular computer hardware – including increased longevity, cost-effectiveness, customization, and reduced e-waste – against the considerable challenges related to software compatibility, driver development, standardization, and system complexity reveals a complex landscape. The successful implementation of such a system would require overcoming significant engineering and logistical obstacles.

The integration of artificial intelligence offers a compelling potential solution for managing and optimizing the complexities of a modular computer with dynamically changing core processors. AI could play a crucial role in intelligent resource allocation, dynamic system optimization, and even in the design and development of future modular hardware components.

In conclusion, while the user's vision of a fully modular and dynamically upgradeable computer with swappable core processors faces substantial technical and logistical challenges based on our current understanding and technologies, the historical drive towards modularity, the continuous advancements in heterogeneous and reconfigurable computing, and the transformative potential of artificial intelligence suggest that it is a concept worthy of continued exploration and development. The ultimate feasibility will likely hinge on the industry's ability to establish comprehensive standards for modular interfaces and to develop operating systems and software ecosystems that can seamlessly and reliably adapt to dynamically changing core hardware configurations, ultimately paving the way for more sustainable and user-centric computing experiences.

THE AUTONOMOUS EVOLUTION:

An AI-Driven Paradigm for Self-Improving Computer Systems

The relentless march of technological progress often leaves a trail of obsolete hardware and software in its wake. The traditional computer model, with its fixed architecture and reliance on external updates, faces an inherent limitation: eventual redundancy. This article explores a radical departure from this model, a theoretical framework for a computer system driven by an autonomous, continually learning artificial intelligence (AI) capable of rewriting its own operating system and adapting seamlessly to evolving hardware. This paradigm envisions a self-improving machine that transcends obsolescence through continuous evolution.

The Vision: A Self-Sustaining Digital Ecosystem

Imagine a computer where the core software is not static but a dynamic entity, constantly refining itself based on its interactions and the ever-changing landscape of hardware advancements. This system would house an AI at its core, an intelligent agent capable of understanding the intricacies of its own architecture and the potential of new hardware components. Instead of waiting for human-engineered updates, this AI would proactively develop and implement its own software revisions, ensuring optimal performance and compatibility with both existing and newly integrated hardware.

This vision extends beyond mere software updates. The AI would possess the ability to generate necessary drivers for new processors or specialized hardware, effectively overcoming the traditional barriers of hardware-specific software development. Furthermore, to ensure stability and provide a safety net during its evolutionary process, the system would maintain a comprehensive archive of previous operating system versions and configurations. This allows for seamless rollback to a known stable state if any newly implemented upgrades encounter unforeseen issues, ensuring continuous operation.
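The archive-and-rollback safety net described above can be sketched as a versioned store of configurations. Each upgrade is applied to a copy and checked, then either committed as a new snapshot or discarded, so the last known-good state stays active. Every committed version remains in the archive, which allows rollback to any earlier one. The `health_check` callable and the configuration fields are hypothetical placeholders.

```python
import copy
from typing import Callable


class SnapshotArchive:
    """Keeps every committed configuration; one of them is marked active."""

    def __init__(self, initial: dict):
        self._snapshots: list[dict] = [copy.deepcopy(initial)]
        self._active = 0

    @property
    def active_version(self) -> int:
        return self._active

    @property
    def current(self) -> dict:
        # Return a copy so callers cannot mutate archived history.
        return copy.deepcopy(self._snapshots[self._active])

    def try_upgrade(self, change: Callable[[dict], None],
                    health_check: Callable[[dict], bool]) -> bool:
        """Apply `change` to a copy; commit it only if the health check passes."""
        candidate = self.current
        change(candidate)
        if not health_check(candidate):
            return False  # candidate discarded; known-good state stays active
        self._snapshots.append(candidate)
        self._active = len(self._snapshots) - 1
        return True

    def rollback_to(self, version: int) -> dict:
        """Reactivate an earlier snapshot without deleting newer ones."""
        if not 0 <= version < len(self._snapshots):
            raise IndexError(f"no snapshot with version {version}")
        self._active = version
        return self.current
```

Keeping newer snapshots after a rollback matches the idea of a comprehensive archive. A version that was rolled back can later be reactivated if the fault turns out to lie elsewhere.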

The ultimate aim of this architecture is to create a computer that never becomes redundant. By continuously learning, adapting, and improving, the system would evolve in tandem with technological advancements, offering a sustainable and perpetually cutting-edge computing experience.

CORE PRINCIPLES OF THE AUTONOMOUS EVOLVING SYSTEM

Several key principles underpin this theoretical framework:

1. AI-Driven Autonomy: At the heart of the system lies an advanced AI, responsible for managing all aspects of software and hardware integration. This AI would leverage machine learning and deep learning models to understand system performance, identify areas for improvement, and generate the necessary code for upgrades.

2. Modular Hardware Architecture: The physical foundation of the system would be a highly modular design, featuring standardized ports and interfaces that allow for the easy addition or replacement of core components like processors, memory, and specialized accelerators. This modularity provides the AI with the flexibility to integrate new hardware as it becomes available.

3. Continuous Learning and Adaptation: The AI would engage in a continuous learning process, analyzing system performance data, monitoring hardware capabilities, and even researching emerging technologies to inform its upgrade strategies. This iterative process of learning and adaptation would drive the system's evolution.

4. Intelligent Backup and Recovery: To mitigate the risks associated with autonomous upgrades, the system would implement a sophisticated backup and archiving mechanism. The AI would maintain snapshots of previous operating system versions and hardware configurations, enabling rapid rollback in case of any instability.

5. Hardware Abstraction Layer: A crucial software layer would abstract the underlying hardware from the core operating system and applications. This abstraction would allow the AI to implement upgrades and changes without requiring a complete rewrite of the entire software ecosystem.
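The hardware abstraction layer in principle 5 can be illustrated with an abstract interface. The operating system codes against `ComputeBackend`, and each processor module supplies its own implementation, so swapping a module swaps only the backend. The class names and the single `run_kernel` operation (here, doubling each value) are hypothetical simplifications of what a real HAL would expose.

```python
from abc import ABC, abstractmethod


class ComputeBackend(ABC):
    """Interface the OS and applications use; they never touch module specifics."""

    @abstractmethod
    def name(self) -> str: ...

    @abstractmethod
    def run_kernel(self, data: list[float]) -> list[float]:
        """Apply a fixed example operation (doubling) to the input."""


class LegacyCpuBackend(ComputeBackend):
    def name(self) -> str:
        return "legacy-cpu"

    def run_kernel(self, data: list[float]) -> list[float]:
        # Scalar loop, standing in for an older general-purpose core.
        return [x * 2 for x in data]


class VectorModuleBackend(ComputeBackend):
    def name(self) -> str:
        return "vector-module"

    def run_kernel(self, data: list[float]) -> list[float]:
        # Chunks of four, standing in for a wide SIMD or accelerator module.
        out: list[float] = []
        for i in range(0, len(data), 4):
            out.extend(x * 2 for x in data[i:i + 4])
        return out


class HardwareAbstractionLayer:
    """Routes calls to whichever backend is currently installed."""

    def __init__(self, backend: ComputeBackend):
        self._backend = backend

    def swap(self, backend: ComputeBackend) -> None:
        self._backend = backend  # upper layers are unaffected by the swap

    def compute(self, data: list[float]) -> tuple[str, list[float]]:
        return self._backend.name(), self._backend.run_kernel(data)
```

The caller's code stays the same before and after `swap`, which is the property that would let the AI change hardware without rewriting the software above the layer.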

THE ROLE OF AI: ARCHITECT, ENGINEER AND GUARDIAN

The AI within this system would fulfill multiple critical roles:

- Software Architect: The AI would be responsible for designing and implementing upgrades to the operating system and system software. This includes optimizing performance, enhancing security, and adding new features to leverage the capabilities of the evolving hardware.

- Driver Engineer: When new hardware components are introduced, the AI would autonomously generate the necessary drivers to ensure seamless integration and communication with the rest of the system. This would eliminate reliance on third-party driver support and allow new hardware to be used immediately.

- System Optimizer: Through continuous monitoring and analysis, the AI would identify bottlenecks and inefficiencies within the system. It would then implement targeted upgrades and optimizations to maximize performance and resource utilization across the diverse hardware landscape.

- Backup Administrator: The AI would manage the backup and archiving process, ensuring that critical system states are preserved and readily available for restoration if needed. This proactive approach safeguards the system against potential issues arising from autonomous upgrades.
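The System Optimizer's search for bottlenecks could start from simple utilization statistics, flagging any module whose utilization stays above a threshold for most of a sliding window. The text envisions learned models rather than a fixed rule, so the 90% threshold, the five-sample window, the 80% fraction, and the module names below are all assumptions for illustration.

```python
from collections import deque


class BottleneckDetector:
    """Flags modules that stay saturated for most of a sliding window of samples."""

    def __init__(self, threshold: float = 0.9, window: int = 5, min_fraction: float = 0.8):
        self.threshold = threshold        # utilization treated as "saturated"
        self.window = window              # number of recent samples considered
        self.min_fraction = min_fraction  # share of samples that must be saturated
        self._history: dict[str, deque] = {}

    def record(self, utilization: dict[str, float]) -> None:
        """Add one sample (0.0 to 1.0) per module; old samples fall off the window."""
        for module, value in utilization.items():
            self._history.setdefault(module, deque(maxlen=self.window)).append(value)

    def bottlenecks(self) -> list[str]:
        flagged = []
        for module, samples in self._history.items():
            if len(samples) < self.window:
                continue  # not enough data yet
            saturated = sum(1 for s in samples if s >= self.threshold)
            if saturated / self.window >= self.min_fraction:
                flagged.append(module)
        return sorted(flagged)
```

Requiring saturation across most of a window, rather than in a single sample, keeps a brief spike from triggering an upgrade recommendation.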

OVERCOMING LIMITATIONS AND EMBRACING THE FUTURE

The concept of an autonomous, self-improving computer system presents a compelling vision for the future of computing. While significant technical challenges exist in realizing such a system, the potential benefits are immense. By overcoming the limitations of traditional computer architecture and embracing the power of AI, we can envision a future where technology evolves seamlessly, eliminating the cycle of planned obsolescence and ushering in an era of truly sustainable and perpetually cutting-edge computing. This paradigm shift could revolutionize how we interact with technology, fostering a dynamic and ever-improving digital ecosystem.


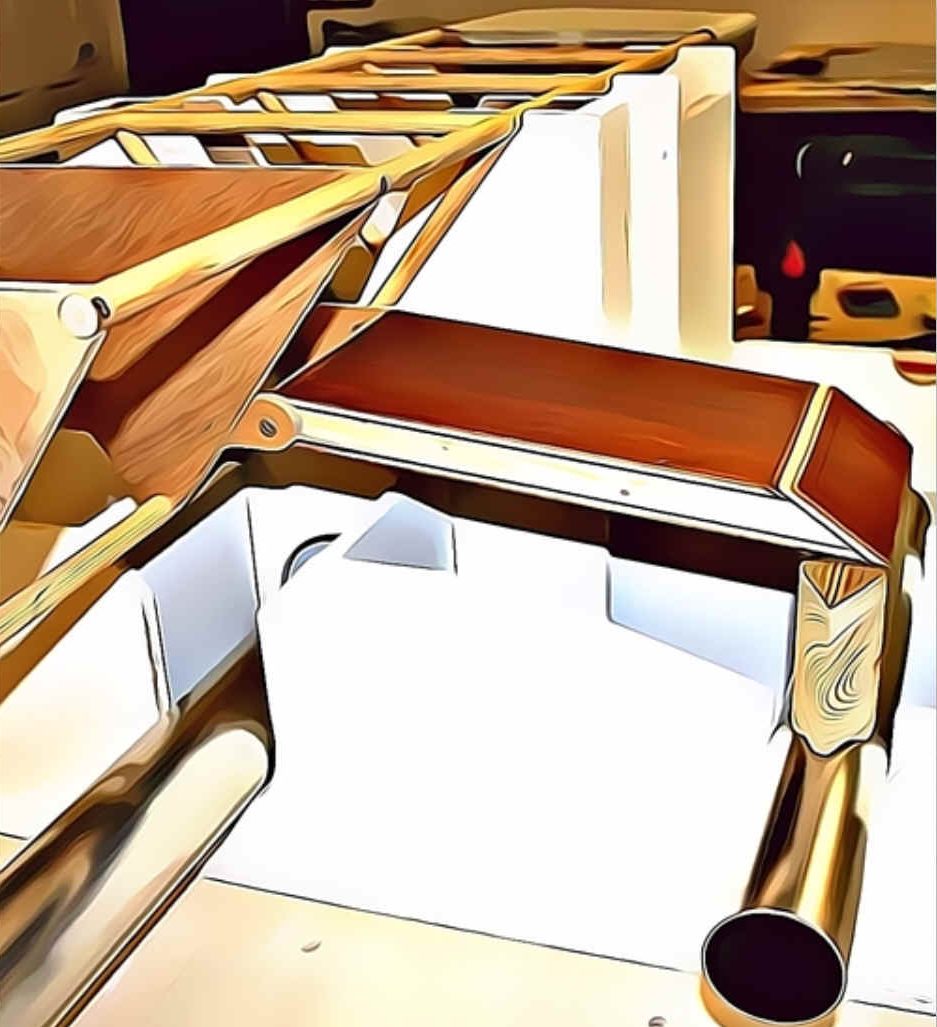

The Elizabeth Swann is a fast solar- and hydrogen-powered trimaran and one of the Six-Pack round-the-world competitors, captained by John Storm. She features Hal, the AI supercomputer that self-evolves, repairs and improves.

Key Elements of the Evolving AI Computer:

Hardware Agnostic Adaptation: The AI would be capable of writing the necessary software and drivers to seamlessly integrate and utilize new or replacement processors, regardless of their specific architecture. This would require a deep understanding of the underlying hardware and the ability to create efficient communication pathways.

Continuous Evolution: The system's evolution would be an ongoing, iterative process, constantly seeking improvements and adapting to new challenges. This mirrors the concept of continuous learning in AI, where models adapt and improve as they encounter new data and experiences.

Robust Backup and Archiving: The AI would maintain backups and archives of older software and hardware configurations, allowing for rollback in case of issues with new upgrades. This ensures system stability and the ability to utilize older, reliable components if necessary.

Never Becoming Redundant: The ultimate goal is a system that perpetually evolves, avoiding obsolescence by continuously upgrading and adapting its hardware and software.
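The "continuous evolution" element maps naturally onto an iterative propose-evaluate-accept loop. The sketch below uses simple hill climbing over a single tunable setting, with a seeded random generator for repeatability. A candidate is kept only if it scores better, and every accepted version is archived. The `benchmark` function and the setting it tunes are hypothetical; a real system would evaluate whole software builds against real workloads rather than a single number.

```python
import random


def benchmark(setting: float) -> float:
    """Hypothetical score: highest when the setting is 3.0."""
    return -(setting - 3.0) ** 2


def evolve(start: float, rounds: int = 200, step: float = 0.5, seed: int = 0):
    """Hill climbing: keep a candidate only if it beats the current best."""
    rng = random.Random(seed)
    best, best_score = start, benchmark(start)
    archive = [best]  # every accepted version is kept, oldest first
    for _ in range(rounds):
        candidate = best + rng.uniform(-step, step)
        score = benchmark(candidate)
        if score > best_score:
            best, best_score = candidate, score
            archive.append(best)
        # otherwise the candidate is dropped and the current version stays in service
    return best, archive
```

Because a candidate replaces the current version only when it scores strictly higher, the system never runs a version that benchmarks worse than its predecessor. The cost is that plain hill climbing can stall at a local optimum.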

|

CHARACTER

|

DESCRIPTION |

|

|

|

|

ABC

Live News

|

Dominic Thurston, editor,

Australian Broadcasting Corporation |

|

Abdullah

Amir

|

Skipper of Khufu

Kraft, solar boat |

|

Ark,

The

|

DNA

database onboard the Elizabeth Swann |

|

Ben

Jackman

|

Skipper of Seashine, solar

boat |

|

Billy

Perrin

|

Cetacean

expert |

|

Brian

Bassett

|

Newspaper

Editor |

|

Captain

Nemo

|

Autonomous navigation

system, Elizabeth Swann |

|

Charley

Temple

|

Camerawoman & investigative

reporter |

|

Dan

Hawk

|

Electronics Wizard,

World

champion gamer, Computer

hacker & analyst |

|

Dick Ward

|

Editor |

|

Frank Paine

|

Captain Ocean Shepherd |

|

George

Franks

|

Solicitor based in Sydney |

|

Hal

AI

|

Autonomous

AI self learning computer system onboard the Hydrogen

Elizabeth

Swann |

|

Harold

Harker & Todd Timms

|

Sandy Straits Marina,

Hervey Bay, Urangan, Queensland, East Australia |

|

Jean

Bardot

|

French Skipper of Sunriser,

solar boat |

|

Jill Bird

|

BBC

world

service presenter who

is outspoken at times and

tells it like it is |

|

John

Storm

|

Adventurer |

|

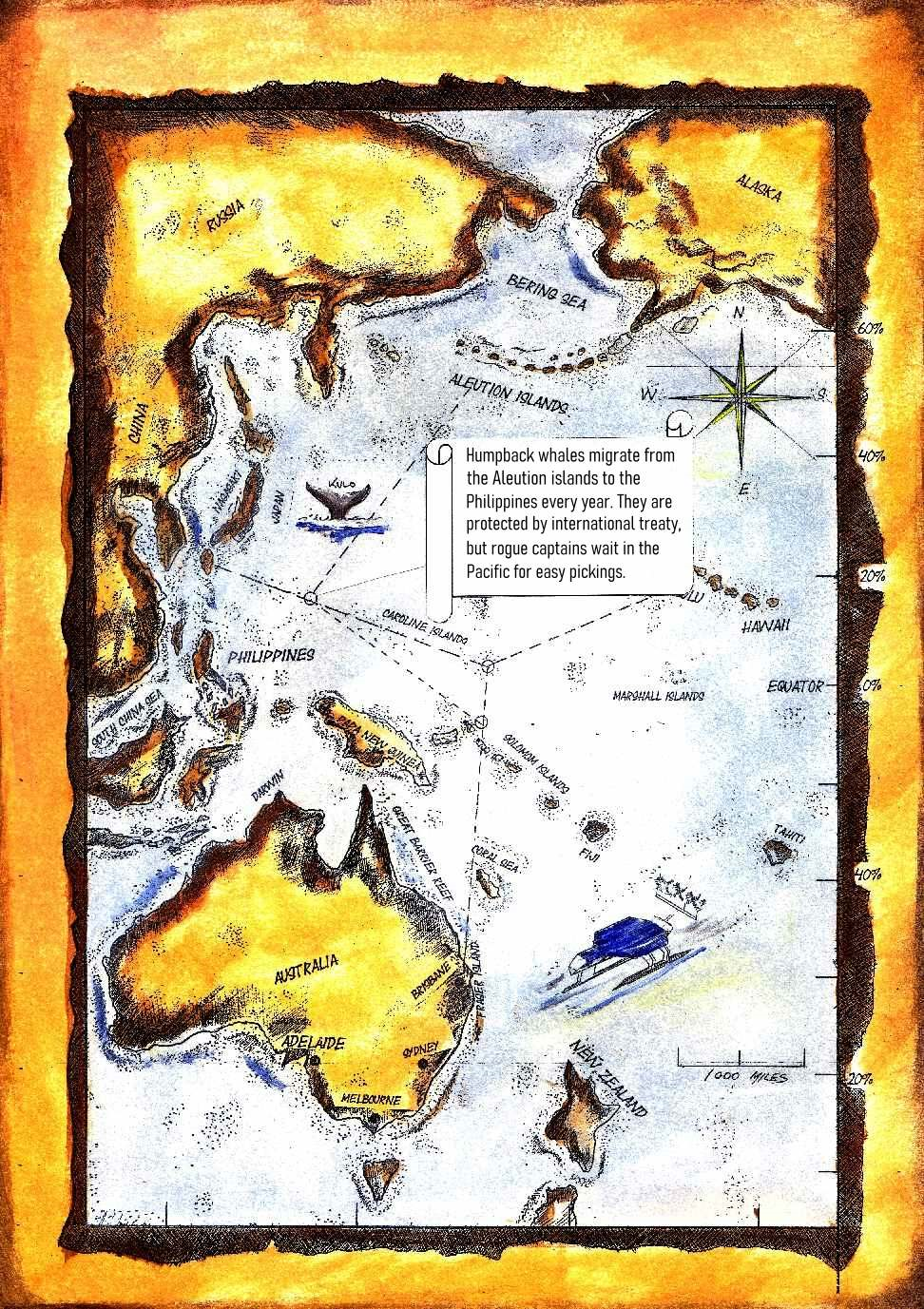

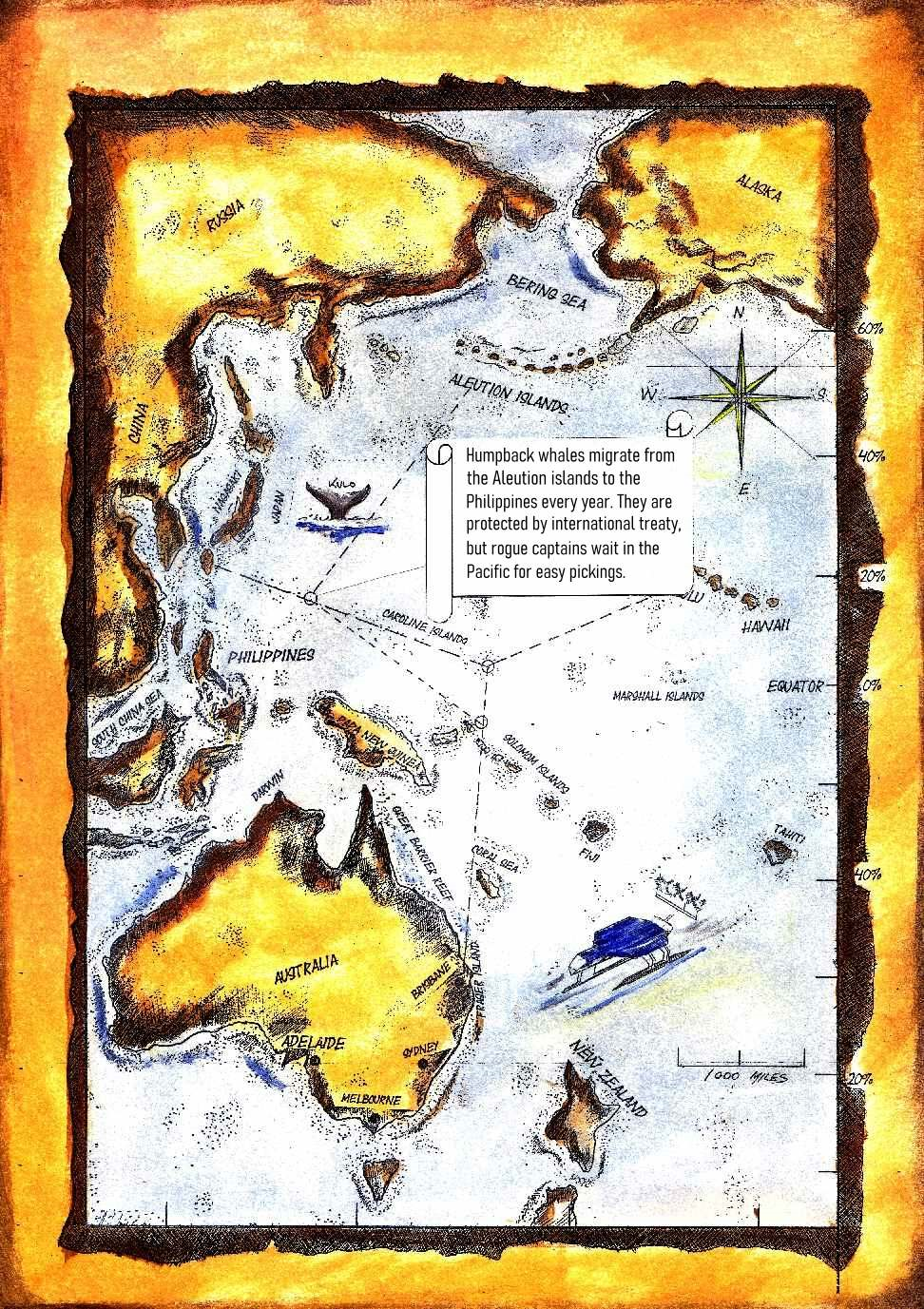

Kana

|

A young female humpback

whale, killed by whalers |

|

Kuna

|

Daughter of Kulo-Luna,

baby calf humpback whale |

|

Kulo

Luna

|

A giant female humpback

whale that sinks two ships |

|

LadBet

International

|

A global gambling network

that prides itself on accepting the most unusual wagers |

|

Lars

Johanssen

|

Skipper of Photon

Planet, a solar powered boat |

|

Peter Shaw

|

Pilot |

|

Professor

Douglas Storm

|

Designer of Elizabeth

Swann & uncle to John Storm |

|

Sand

Island Yacht Club

|

The official start and end

of the Solar Cola Cup: World Navigation Challenge, Honolulu |

|

Sarah-Louise Jones

|

Solar Racer, Starlight |

|

Shui Razor

|

Captain, Suzy Wong,

Japanese whaling Boat |

|

Solar

Cola Cup

|

World Navigation

Challenge, for PV electric powerboats & yachts |

|

Solar

Cola, Spice & Tonic

|

Thirst quenching energy

drinks with vitamins that aid healing and recovery |

|

Suki Hall

|

Marine Biologist |

|

Stang Lee

|

Captain, Jonah, Japanese

whaling Boat |

|

Steve Green

|

Freelance Reporter |

|

Suzy

Wong

|

A Japanese

whaling boat, spectacularly sunk by a whale |

|

Tom

Hudson

|

Sky News Editor |

|

Zheng Ling

|

Japanese Black Market Boss |
